Identifying woke employees at scale

Exploiting prediction error to mechanically measure leftism

In order to take control of an institution using AI you must figure out where to start.

Upon entering a new institution for the first time you are surrounded by people you depend on to get the job done. Some of them are apolitical and just want to get paid, others are your allies, and still others see themselves as the “resistance”. This last group has no interest in the goals of the organization or in achieving mutual success for the group. Their goal is to abuse the resources or influence of their job role to advance their far-left social agenda, even if that seriously damages the organization itself (e.g. Bud Light, Scientific American, Jaguar, Disney).

It would be convenient if you could quantify the “wokeness level” of each employee via a quick and lightweight process. Employees would complete it as part of their regular mandated training, and in turn this would allow for targeted executive actions to be taken against those with the greatest likelihood to cause trouble.

Such a test would need the following properties:

Non-physical: it can be completed on a computer. Using fMRI scanners, for example, is out.

Ideally, does not require changes to the law.

Not easily spoofed by those wishing to evade detection.

The third requirement seems challenging. If you don’t meet it, then the moment the outcomes are used to fire people or deny promotions, any opportunity to misrepresent oneself will be exploited immediately.

Does such a test really exist? Remarkably, it does. We’ll start with some neuroscience theory, go on to describe the test (which has already been developed and trialled), and explore alternatives like biometrics. We’ll finish up with a short discussion of the morality of mass cancelling woke employees, which is one possible way to use the resulting data.

The Mask Illusion

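You’re probably familiar with optical illusions like the hollow mask illusion, in which the concave inside of a mask is perceived as an ordinary outward-facing face.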

The unease it generates can be explained by the predictive coding hypothesis. Our brains generate continuous predictions about the true state of the world based on sensory input combined with experience. Having two eyes plus lots of experience lets the brain generate depth predictions even though we have no sense that measures distance directly. This happens subconsciously, and when it goes wrong the conscious emotion we feel is surprise.

Surprise is adaptive - it lets us know that we don’t fully understand the environment and should be more careful, lest we get eaten by predators. Surprise also lets us learn, which is why we can get better at tasks like catching a ball. With enough experience, the brain learns to subconsciously predict where the ball is going in 3D space from 2D images.

Prediction in both artificial and biological neural networks is “autoregressive”: predictions build on earlier predictions. Compounding predictions like these are fragile, and errors can quickly accumulate until the overall prediction becomes garbage. A good way to visualize this is to watch what happens when an artificial neural network is asked to repeatedly predict the next frame in a video:

The results get weird … fast. Events quickly lose their connection with reality.

The mistakes in the video aren’t random. They occur because there’s no way for the AI to go backwards. Having committed to a frame, it has to build on it, even if that frame doesn’t quite make sense. As in the children’s game where everyone sits in a circle and adds a word to a shared story, once the model makes a bad prediction it has to double down on the error, producing ever more errors as it struggles to remain consistent with earlier failures. For example, the model correctly predicts that a firefighter should wear fluorescent yellow visibility strips, but then incorrectly predicts that everyone should have such strips. Even as the action becomes less and less connected to the original scene, yellow strips keep appearing in ever odder places on people’s bodies as the model struggles to remain consistent with that mistake.

We also experience these same kinds of prediction failures when our senses are disconnected during sleep. With nothing continuously resetting our brain’s predictions back to reality the errors compound. Impossible things keep happening yet seem natural.
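The compounding described above can be illustrated with a toy numerical sketch. This is not the video model: it assumes a one-dimensional world whose true state never changes and a model whose one-step prediction is off by 5% (the function name `rollout_errors` and the gain values are arbitrary choices for illustration). In the autoregressive rollout the model feeds on its own previous output, as in a dream; in the other it is reset to the real observation before every step, as senses do when we are awake.

```python
def rollout_errors(steps, true_gain=1.0, model_gain=1.05, x0=1.0):
    """Toy comparison of autoregressive vs. reset-each-step prediction.

    Assumption for illustration: the world evolves as x[t+1] = true_gain * x[t],
    while the model believes it evolves as x[t+1] = model_gain * x[t].
    Returns two lists of absolute errors, one entry per step.
    """
    x_true = x0
    x_auto = x0  # autoregressive: each prediction builds on the previous prediction
    auto_errors, reset_errors = [], []
    for _ in range(steps):
        x_reset = model_gain * x_true  # one-step prediction from the real current state
        x_auto = model_gain * x_auto   # prediction from the model's own last output
        x_true = true_gain * x_true    # what actually happens next
        auto_errors.append(abs(x_auto - x_true))
        reset_errors.append(abs(x_reset - x_true))
    return auto_errors, reset_errors


if __name__ == "__main__":
    auto, reset = rollout_errors(20)
    for t in (1, 5, 10, 20):
        print(f"step {t:2d}: autoregressive error {auto[t - 1]:.3f}, "
              f"reset-each-step error {reset[t - 1]:.3f}")
```

Both rollouts make the same 0.05 error on the first step, but with these settings the autoregressive error grows geometrically (as 1.05^t - 1) while the reset error stays at 0.05 forever.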

Wokeness theory

Our brains don’t only generate predictions about physical space; that’s just one of the easiest kinds of prediction to explore in a lab. We’re also constantly generating longer-range predictions: when will I get hungry? Is this dark cave dangerous? Why did the dog wag its tail when I fed it? Predictions can fall anywhere on the spectrum from fully unconscious (depth perception) to fully conscious (who do I think will win the election?). Some straddle the boundary, and these surface as gut feelings or intuition: we’re aware we’re making a prediction but aren’t sure how.

One of the hardest things to predict is other people’s reactions, because it requires building a model of how they think based on a lot of indirect evidence and observations. Understanding how people you don’t regularly interact with think is the hardest of all.

Extremely inaccurate predictions are a prominent feature of the woke worldview:

Someone voted for X, so we can predict they are racist/sexist/etc. (and not, say, concerned with economic factors).

A company did Y, so we can predict it was motivated by profit (and not, say, by simple incompetence).

The Gazans are oppressed by the Israelis, so we can predict they share the same views as us on gay rights.

If our opponent wins the election we can predict he’ll throw us all in labor camps.

Wokeness can be cast as a form of compounding prediction error. It starts with an incorrect prediction: a mundane thing seems bad, so people who do or support that thing are predicted to be generically evil in every way, which in turn leads to the prediction that such people are dangerous to be around. Survival instincts kick in and the woke seek to avoid contact. This deprives them of any chance for a reality check, so their brains make more and more predictions based primarily on their own previous ideas rather than on observed evidence. The errors compound, and pretty quickly they believe themselves to be engaged in a heroic, history-defining battle against “literal” Nazis.

Social media makes the problem worse. Once you have staked out a position in public, it’s hard to go back and admit you were wrong when faced with new evidence, especially if that evidence is a personal experience nobody knows you had. Just like the video generation AI, you can’t go back in time and change your earlier predictions without being ridiculed for inconsistency and foolishness.

Compounding prediction error can cause a wide range of mental illnesses beyond just wokeness. A key feature of borderline personality disorder (BPD) is difficulty modelling other people’s beliefs about the sufferer. For example, it’s common for sufferers to believe that people who seem nice are actually Machiavellian manipulators masking their true beliefs. Phobias are a similar example: relatively harmless things are predicted to be extremely dangerous, so they’re avoided and the sufferer never gets evidence that could force their predictions back to reality. The treatment, exposure therapy, is to convince the sufferer to incrementally expose themselves to the feared thing until their bad predictions are overridden.

At this point it would be reasonable to object that if prediction error is a part of being human and can cause multiple different disorders, then there’s nothing particularly woke about it and the right is just as susceptible as the left. But that isn’t so. Whilst everyone finds modelling other people’s beliefs hard, the key error that puts someone on a path to wokeness is when they explain other people’s beliefs as caused by evil. The right tend to think the left are naive; the left tend to think the right are deliberately malicious. Although there are of course exceptions on both sides, this is the general pattern.

You can work with naive people. You can listen to them and talk to them, and in fact you must talk to them, because otherwise how would they become wiser? This belief can be seen in the habit right-leaning and libertarian people have of reading left-wing media even when they disagree with it, and of posting comments in left-leaning forums. The reverse almost never happens: leftists don’t wander into right-wing spaces and start arguing with the people they find there.

This happens because our culture is very clear that you can’t fix evil. Hollywood movies are noticeably short on cases where the antagonist is diverted from his wicked ways by a well-crafted argument. The lesson woke people have had drummed into their heads since childhood is simple: once your heart goes black, there’s no way back. Evil people can’t be reformed but they can be dangerous, and worse, their wicked ways can spread between people. It’s logical, then, that a good person must fight the non-woke in any way possible whilst simultaneously avoiding contact with them. And this is what we see them do.

Exploiting prediction error for detection

If this hypothesis is correct, it provides a robust way to detect wokeness even amongst people trying to hide it. All you have to do is ask people to predict how a hypothetical conservative would answer a large set of questions about moral issues, and compare their predictions to ground-truth polling data.

This sort of test has been done. In Graham2012, a study of over 2,000 people found that conservatives do indeed make much more accurate predictions about the left than leftists make about conservatives. The study design was simple. The sample was divided into two halves. The first half was asked to answer the questions directly and to place themselves on the political spectrum. The second half was asked to place themselves on the spectrum and then answer the questions as if they were someone with the opposite views to their own.

The study has its limits: the “moral foundations questionnaire” on which it’s built arguably misses some of the core differences driving disagreement (e.g. it ignores concern for truth), and it didn’t let people self-declare as libertarian. Nonetheless, it showed large and clear differences. Specifically, the left have much more exaggerated beliefs about the right than the other way around. That is, leftists think everyone on the right has very extreme views, whereas the right are much better calibrated about the left’s views.

This implies that woke people can be detected by asking them to answer questions as if they were conservatives. The woke will give much more extreme answers than is accurate, whereas the non-woke will give answers close to the ground-truth data.

Given the enormous anti-conservative bias in academic social science, it will not surprise you to learn that there have been very few follow-up studies. Nonetheless, a test based on this concept would have the following advantages:

Easy to administer. It can just be a multiple choice web form.

It generates a score, not just a binary yes/no outcome. Different jobs can have differently chosen maximum wokeness scores.

Easily randomized. There are thousands of possible things you could ask people to predict, and LLMs can be used to continuously generate variants of the same questions. Newly generated questionnaires can be deployed automatically to large survey platforms to establish a newly calibrated ground truth.

“Cheating” by memorizing known good answers is fine: if wokeness is in fact caused by prediction error, then forcing the woke to acquire a better understanding of the non-woke should make them less extreme, solving the problem the test is designed to detect in the first place.

Biometrics

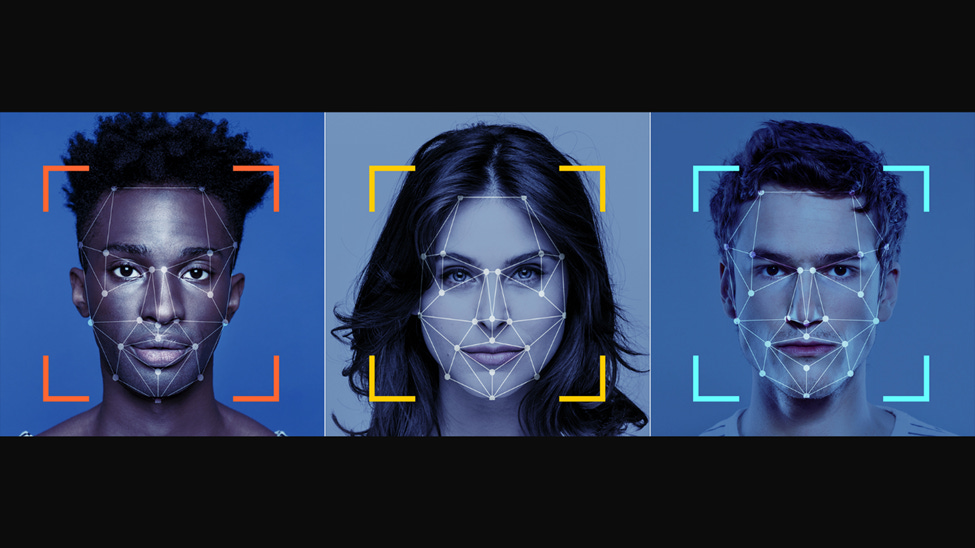

There’s evidence that political ideology may be linked to biology beyond the obvious confounds of gender, age and race. For example, AI can detect political orientation from photos, and it’s reported that MRI scans can reliably detect leftism from the sizes of different brain areas.

These findings are intriguing, but I put them to one side here because:

It’s unclear to what extent these findings are real and will replicate. Whilst the AI face finding came out of computer science rather than social science and seems to use a robust methodology, there’s a long history of model effectiveness being accidentally exaggerated due to data split issues, overfitting and other problems.

The error rate is still quite high.

AI models are claimed to detect many other things from just a photo, e.g. sexual orientation, or wealth even when faces are blurred. In some cases this has led to incorrect conclusions, e.g. that a theocratic regime could detect gay people through mass surveillance, when in reality the model is detecting incidental features like photo quality or smartphone brand, which wouldn’t carry over to photos from outside the training set distribution.
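The failure mode in the last point, a model latching onto an incidental feature of how the data was collected, is often called shortcut learning. Below is a minimal sketch using purely synthetic data (no real photos or personal attributes): each example has a weak genuine cue and a strong confound standing in for something like photo quality, which tracks the label in one data collection but not in another. The helper names and the 60%/95% agreement rates are arbitrary assumptions chosen for illustration. A simple learner that picks the single best feature on the training set chooses the confound, scores well on a test set collected the same way, and falls to roughly chance once the confound stops tracking the label.

```python
import random


def make_dataset(n, confound_agreement, signal_agreement=0.6, seed=0):
    """Generate n synthetic (features, label) pairs with binary labels.

    'signal' matches the label with probability signal_agreement: a weak but
    genuine cue. 'confound' matches it with probability confound_agreement:
    an incidental feature (think camera quality) whose link to the label
    depends on how the data happened to be collected.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        signal = label if rng.random() < signal_agreement else 1 - label
        confound = label if rng.random() < confound_agreement else 1 - label
        rows.append(({"signal": signal, "confound": confound}, label))
    return rows


def accuracy(rows, feature):
    """Fraction of rows where predicting label == feature value is correct."""
    return sum(feats[feature] == label for feats, label in rows) / len(rows)


def fit_stump(rows):
    """Choose the single feature that best predicts the label on these rows."""
    return max(("signal", "confound"), key=lambda f: accuracy(rows, f))


if __name__ == "__main__":
    train = make_dataset(2000, confound_agreement=0.95, seed=1)
    same_collection = make_dataset(2000, confound_agreement=0.95, seed=2)
    new_collection = make_dataset(2000, confound_agreement=0.5, seed=3)

    chosen = fit_stump(train)
    print("chosen feature:", chosen)
    print(f"accuracy, same collection: {accuracy(same_collection, chosen):.2f}")
    print(f"accuracy, new collection:  {accuracy(new_collection, chosen):.2f}")
```

The in-distribution score looks impressive only because the test set shares the training set's collection quirks, which is the same trap as the data split issues mentioned earlier.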

More importantly, it’s not clear that it makes sense to view wokeness as a biological phenomenon, even if it may correlate to some extent with physical attributes like gender and with mental health. People can change their ideology quickly and in response to clearly social factors, which is hard to explain if the underlying cause is biological.

On the morality of mass cancellation

The most obvious way to use data on employee wokeness is to simply fire the wokest people. In parts of the world where employees are protected by left wing laws against “unfair dismissal” this may be difficult to pull off, but as the left has amply demonstrated, such laws are typically enforced by regulatory bodies that can simply be captured and then ordered to ignore certain kinds of case (e.g. sexism against men, dismissal for being conservative). Firings can easily be justified on the same vague grounds used to justify firings of the non-woke, e.g. that they create a hostile work environment.

But would it be right? Would it be just?

In the weeks following the Trump assassination attempt, the right-wing blogosphere was rocked by debates over whether it was moral to cancel random workers who publicly wished the assassin had succeeded, as Libs of TikTok did successfully in a long series of cases. No such debate happens on the left, because why would you hesitate to distance yourself and your group from evil people? Examples of essays in this debate include pieces by Scott Alexander, Librarian of Celaeno, and Andrew Doyle (a.k.a. Titania McGrath).

This blog is concerned with tactics, and sides with those who observe that in a war (cultural or hot), people who sit around debating whether to stoop to the level of their opponents tend to lose. A tactic can only be ruled out in war when all sides have agreed not to use it, and that only happens after the side that deployed it most aggressively has been defeated. That isn’t going to happen anytime soon, so the debate is academic - the left will continue to aggressively purge anyone who isn’t sufficiently loyal, people will mostly stop wishing Thomas Crooks had succeeded, and the right-wing “should we cancel?” arguments will fizzle out.

Regardless, the primary reason to mass fire woke employees is not even based on their morality to begin with. Woke is correlated with broke because woke employees systematically make decisions that are good for their ideology first and their employer second. Woke employees can’t be trusted with institutional power because they will immediately abuse it, and that’s a problem independent of any specific moral code. Institutions need workers who will advance the goals of the institution, and that’s just not compatible with viewing yourself as a soldier in a worldwide struggle against evil. A competent institution must find ways to detect and exclude such employees, otherwise it will fail at its goals.

Other techniques for identifying and neutralizing woke employees will be the topic of future posts.

I think this is a very well-reasoned post that also makes me recoil in horror at its conclusions.

You don't fire someone for their beliefs. End of. You fire people for sabotaging an organisation. Even if their beliefs make them statistically more likely to sabotage the organisation, you don't fire them unless and until that sabotage happens.

It's the same reason we don't use racial profiling. Even if black men are statistically more likely to commit crimes, we don't pre-emptively throw them in jail just to be on the safe side.

We aren't woke; we don't see people as a mishmash of categories, we see them as individuals who fail or succeed on their own merits.

How would you recommend keeping woke actors or institutions from applying the same strategies that you’re outlining here against their non-/anti-woke counterparts?

Incidentally, rather than Borderline Personality Disorder, a far better analogue for your argument would be phobias. An initial negative experience is globally generalized in a way that allows the fear to persist by preventing future corrective experiences. The most effective form of treatment is exposure - forcing the individual to contact the feared thing in reality in order to adjust the cognitive model and extinguish the misaligned response.

I share that because your assertions regarding Borderline Personality Disorder are quite wrong and substantially undermine the quality of your argument. The defining characteristics of a *personality disorder* are (1) a stable and pervasive pattern that (2) is extremely difficult to change and (3) causes significant distress or impairment in one or more domains of life - i.e., despite repeated painful corrective feedback from reality, the cognitive models that drive their behavior remain highly resistant to alteration. The etiology of any of the personality disorders is multi-factorial and treatment is intensive, long-term, and costly. Anyone with psychological training is going to immediately flag your premise as wrong and that, at least rhetorically, weakens your argument to them.